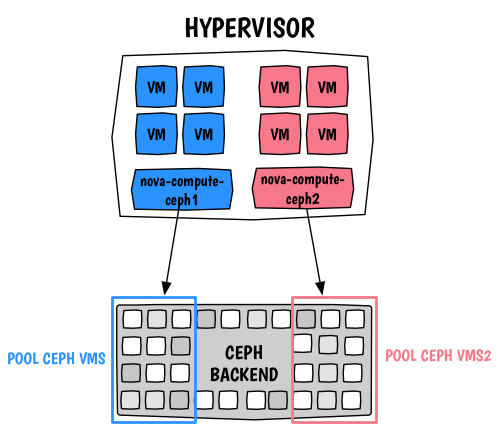

OpenStack Nova: configure multiple Ceph backends on one hypervisor

Configure a Nova hypervisor with more than one backend to store the root ephemeral disks of instances.

I. Rationale

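The main idea here is to make proper use of the resources on your hypervisors. Currently, Nova does not support multiple images_type backends, so a single compute node cannot offer different kinds of root ephemeral disks to its instances. What we want is something like Cinder's multi-backend support, where we can assign types. Since I did not want to dedicate compute nodes to each backend, I had to investigate a bit to see whether I could find a suitable solution. The solution works but is somewhat unconventional. Still, I believe it will be useful to anyone with a limited number of compute nodes and a variety of use cases.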

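One use case I had in mind was setting different QoS capabilities on root ephemeral disks without being constrained per hypervisor. You could also decide to offer different Ceph backends (with different speeds, and so on).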

II. Configure Ceph

First we need to prepare the Ceph cluster. For this we will create two different pools:

$ ceph osd pool create vms 128

$ ceph osd pool create vms2 128

Then we create a key that has write access to both pools:

$ ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rwx pool=vms2, allow rx pool=images'
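If the list of pools grows, assembling the OSD capability string by hand becomes error-prone. The following is a minimal sketch, assuming a hypothetical helper named `build_osd_caps` (it is not part of Ceph or OpenStack), that rebuilds the capability string used above from lists of pool names and prints the resulting command instead of running it:

```python
def build_osd_caps(rwx_pools, rx_pools):
    """Return an OSD caps string shaped like the one in the command above.

    build_osd_caps is a hypothetical helper written for this example only.
    """
    caps = ["allow class-read object_prefix rbd_children"]
    caps += [f"allow rwx pool={pool}" for pool in rwx_pools]
    caps += [f"allow rx pool={pool}" for pool in rx_pools]
    return ", ".join(caps)


# Pool names are the ones used in this article.
osd_caps = build_osd_caps(["volumes", "vms", "vms2"], ["images"])

# Only print the command; nothing is sent to the cluster.
print(f"ceph auth get-or-create client.cinder mon 'allow r' osd '{osd_caps}'")
```

Adding a third backend would then only mean appending one more pool name to the first list.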

III. Configure OpenStack Nova

III.1. Nova.conf

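Since we are going to use host aggregates, we need a special scheduler filter (AggregateInstanceExtraSpecsFilter), so configure your Nova scheduler with: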

scheduler_default_filters=RetryFilter,AvailabilityZoneFilter,RamFilter,ComputeFilter,ImagePropertiesFilter,ServerGroupAntiAffinityFilter,ServerGroupAffinityFilter,AggregateInstanceExtraSpecsFilter

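Now we are going to use two Nova configuration files. The only way to run two nova-compute instances on the same hypervisor is to give each one a different host value. These two instances therefore do not refer to real nodes; they are more like logical entities. However, both names must be known to your DNS server or listed in /etc/hosts on your OpenStack controllers.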

Our first nova-compute process will use the following nova-ceph1.conf:

[DEFAULT]

host = compute-ceph1

[libvirt]

images_rbd_ceph_conf = /etc/ceph/ceph.conf

images_rbd_pool = vms

images_type = rbd

Our second nova-compute process will use the following nova-ceph2.conf:

[DEFAULT]

host = compute-ceph2

[libvirt]

images_rbd_ceph_conf = /etc/ceph/ceph.conf

images_rbd_pool = vms2

images_type = rbd
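The two files differ only in the host value and the pool name, so they can be generated from a small mapping. This is a minimal sketch using Python's standard `configparser`; the `BACKENDS` mapping and the `render_nova_conf` function are names invented for this example, and the output contains only the options shown above, not a complete Nova configuration:

```python
import configparser
import io

# Hypothetical mapping: logical host name -> Ceph pool (values from the files above).
BACKENDS = {"compute-ceph1": "vms", "compute-ceph2": "vms2"}


def render_nova_conf(host, pool):
    """Render the per-backend nova-compute options as INI text."""
    conf = configparser.ConfigParser()
    conf["DEFAULT"] = {"host": host}
    conf["libvirt"] = {
        "images_rbd_ceph_conf": "/etc/ceph/ceph.conf",
        "images_rbd_pool": pool,
        "images_type": "rbd",
    }
    buffer = io.StringIO()
    conf.write(buffer)
    return buffer.getvalue()


rendered = {host: render_nova_conf(host, pool) for host, pool in BACKENDS.items()}

for host, text in rendered.items():
    print(f"# --- options for {host} ---")
    print(text)
```

Each rendered text would be written to its own file, one per logical compute service.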

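Now run your Nova processes like so, one process per configuration file (passing both files to a single process would merge them, and only the last host value would survive):

$ nova-compute --config-file /etc/nova/nova-ceph1.conf

$ nova-compute --config-file /etc/nova/nova-ceph2.conf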

If you use systemd, you can use a unit file like the following:

[Unit]

Description=OpenStack Nova Compute Server

After=syslog.target network.target

[Service]

Environment=LIBGUESTFS_ATTACH_METHOD=appliance

Type=notify

Restart=always

User=nova

# One unit per nova-compute process; a second copy of this unit would point at nova-ceph2.conf

ExecStart=/usr/bin/nova-compute --config-file /etc/nova/nova-ceph1.conf

[Install]

WantedBy=multi-user.target

Verify that it works as expected:

$ nova service-list

+----+----------------+---------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary         | Host          | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+----+----------------+---------------+----------+---------+-------+----------------------------+-----------------+
| 1  | nova-conductor | deventer      | internal | enabled | up    | 2015-09-14T09:55:09.000000 | -               |
| 2  | nova-cert      | deventer      | internal | enabled | up    | 2015-09-14T09:55:18.000000 | -               |
| 3  | nova-network   | deventer      | internal | enabled | up    | 2015-09-14T09:55:11.000000 | -               |
| 4  | nova-scheduler | deventer      | internal | enabled | up    | 2015-09-14T09:55:16.000000 | -               |
| 6  | nova-compute   | compute-ceph1 | nova     | enabled | up    | 2015-09-14T09:55:12.000000 | -               |
| 7  | nova-compute   | compute-ceph2 | nova     | enabled | up    | 2015-09-14T09:55:17.000000 | -               |
+----+----------------+---------------+----------+---------+-------+----------------------------+-----------------+
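The same check can be scripted. The sketch below parses a saved copy of the `nova service-list` table and reports which nova-compute hosts are enabled and up. `parse_table` and `compute_hosts_up` are hypothetical helper names, and the sample text is copied from the output above rather than fetched from a live cloud:

```python
SERVICE_LIST = """\
+----+----------------+---------------+----------+---------+-------+
| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |
+----+----------------+---------------+----------+---------+-------+
| 1 | nova-conductor | deventer | internal | enabled | up | 2015-09-14T09:55:09.000000 | - |
| 4 | nova-scheduler | deventer | internal | enabled | up | 2015-09-14T09:55:16.000000 | - |
| 6 | nova-compute | compute-ceph1 | nova | enabled | up | 2015-09-14T09:55:12.000000 | - |
| 7 | nova-compute | compute-ceph2 | nova | enabled | up | 2015-09-14T09:55:17.000000 | - |
+----+----------------+---------------+----------+---------+-------+
"""


def parse_table(text):
    """Turn an ASCII table into a list of dicts keyed by the header cells."""
    header = None
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip border lines and blank lines
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if header is None:
            header = cells  # the first row with cells is the header
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def compute_hosts_up(rows):
    """Return the sorted hosts whose nova-compute service is enabled and up."""
    return sorted(
        row["Host"]
        for row in rows
        if row["Binary"] == "nova-compute"
        and row["Status"] == "enabled"
        and row["State"] == "up"
    )


print(compute_hosts_up(parse_table(SERVICE_LIST)))
```

Both logical hosts should appear in the result; if only one does, the two processes are probably sharing a host value.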

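I deliberately skipped over some parts of the Nova configuration, so things like the libvirt secret are not explained here. If you are not familiar with the configuration, please read the official documentation.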

III.2. Host aggregates

Now we need to logically separate these two hypervisors using host aggregates. For this we will create two aggregates:

$ nova aggregate-create ceph-compute-storage1

+----+-----------------------+-------------------+-------+----------+
| Id | Name                  | Availability Zone | Hosts | Metadata |
+----+-----------------------+-------------------+-------+----------+
| 1  | ceph-compute-storage1 | -                 |       |          |
+----+-----------------------+-------------------+-------+----------+

$ nova aggregate-create ceph-compute-storage2

+----+-----------------------+-------------------+-------+----------+
| Id | Name                  | Availability Zone | Hosts | Metadata |
+----+-----------------------+-------------------+-------+----------+
| 2  | ceph-compute-storage2 | -                 |       |          |
+----+-----------------------+-------------------+-------+----------+

Now we add each hypervisor to its respective aggregate:

$ nova aggregate-add-host ceph-compute-storage1 compute-ceph1

Host compute-ceph1 has been successfully added for aggregate 1

+----+-----------------------+-------------------+-----------------+----------+
| Id | Name                  | Availability Zone | Hosts           | Metadata |
+----+-----------------------+-------------------+-----------------+----------+
| 1  | ceph-compute-storage1 | -                 | 'compute-ceph1' |          |
+----+-----------------------+-------------------+-----------------+----------+

$ nova aggregate-add-host ceph-compute-storage2 compute-ceph2

Host compute-ceph2 has been successfully added for aggregate 2

+----+-----------------------+-------------------+-----------------+----------+
| Id | Name                  | Availability Zone | Hosts           | Metadata |
+----+-----------------------+-------------------+-----------------+----------+
| 2  | ceph-compute-storage2 | -                 | 'compute-ceph2' |          |
+----+-----------------------+-------------------+-----------------+----------+

Finally, we set a special metadata key on each aggregate that our Nova flavors will match later:

$ nova aggregate-set-metadata 1 cephcomputestorage1=true

Metadata has been successfully updated for aggregate 1.

+----+-----------------------+-------------------+-----------------+----------------------------+
| Id | Name                  | Availability Zone | Hosts           | Metadata                   |
+----+-----------------------+-------------------+-----------------+----------------------------+
| 1  | ceph-compute-storage1 | -                 | 'compute-ceph1' | 'cephcomputestorage1=true' |
+----+-----------------------+-------------------+-----------------+----------------------------+

$ nova aggregate-set-metadata 2 cephcomputestorage2=true

Metadata has been successfully updated for aggregate 2.

+----+-----------------------+-------------------+-----------------+----------------------------+
| Id | Name                  | Availability Zone | Hosts           | Metadata                   |
+----+-----------------------+-------------------+-----------------+----------------------------+
| 2  | ceph-compute-storage2 | -                 | 'compute-ceph2' | 'cephcomputestorage2=true' |
+----+-----------------------+-------------------+-----------------+----------------------------+

III.3. Flavors

The last step is to create new flavors so that we can choose which logical hypervisor (and therefore which Ceph pool) our instances run on:

$ nova flavor-create m1.ceph-compute-storage1 8 128 40 1

+----+--------------------------+-----------+------+-----------+------+-------+-------------+-----------+
| ID | Name                     | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |
+----+--------------------------+-----------+------+-----------+------+-------+-------------+-----------+
| 8  | m1.ceph-compute-storage1 | 128       | 40   | 0         |      | 1     | 1.0         | True      |
+----+--------------------------+-----------+------+-----------+------+-------+-------------+-----------+

$ nova flavor-create m1.ceph-compute-storage2 9 128 40 1

+----+--------------------------+-----------+------+-----------+------+-------+-------------+-----------+
| ID | Name                     | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |
+----+--------------------------+-----------+------+-----------+------+-------+-------------+-----------+
| 9  | m1.ceph-compute-storage2 | 128       | 40   | 0         |      | 1     | 1.0         | True      |
+----+--------------------------+-----------+------+-----------+------+-------+-------------+-----------+

We assign each aggregate's special metadata key to the matching flavor so that the scheduler can tell them apart:

$ nova flavor-key m1.ceph-compute-storage1 set aggregate_instance_extra_specs:cephcomputestorage1=true

$ nova flavor-key m1.ceph-compute-storage2 set aggregate_instance_extra_specs:cephcomputestorage2=true
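To see why these keys steer an instance to one logical hypervisor, here is a deliberately simplified model of the matching that AggregateInstanceExtraSpecsFilter performs. It is not Nova's code: the real filter also supports comparison operators and hosts that belong to several aggregates. The function and variable names are made up for this sketch:

```python
SCOPE = "aggregate_instance_extra_specs:"

# Aggregate metadata as seen by each logical host (values from the commands above).
HOST_AGGREGATE_METADATA = {
    "compute-ceph1": {"cephcomputestorage1": "true"},
    "compute-ceph2": {"cephcomputestorage2": "true"},
}

# Flavor extra specs as set with `nova flavor-key`.
FLAVOR_EXTRA_SPECS = {
    "m1.ceph-compute-storage1": {SCOPE + "cephcomputestorage1": "true"},
    "m1.ceph-compute-storage2": {SCOPE + "cephcomputestorage2": "true"},
}


def host_passes(extra_specs, aggregate_metadata):
    """Simplified rule: every scoped key must match the host's aggregate metadata."""
    for key, wanted in extra_specs.items():
        if not key.startswith(SCOPE):
            continue  # simplification: keys outside the scope are ignored
        name = key[len(SCOPE):]
        if aggregate_metadata.get(name) != wanted:
            return False
    return True


def candidate_hosts(flavor_name):
    """Return the hosts that survive the simplified filter for a flavor."""
    specs = FLAVOR_EXTRA_SPECS[flavor_name]
    return [
        host
        for host, metadata in HOST_AGGREGATE_METADATA.items()
        if host_passes(specs, metadata)
    ]


for flavor in FLAVOR_EXTRA_SPECS:
    print(flavor, "->", candidate_hosts(flavor))
```

Under this model each flavor is left with exactly one candidate host, which is what ties a flavor to a Ceph pool.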

IV. Time to test!

Our configuration is complete, so let's see how things work :). I am now booting two instances, one with each flavor:

$ nova boot --image 96ebf966-c7c3-4715-a536-a1eb8fc106df --flavor 8 --key-name admin ceph1

$ nova boot --image 96ebf966-c7c3-4715-a536-a1eb8fc106df --flavor 9 --key-name admin ceph2

$ nova list

+--------------------------------------+-------+--------+------------+-------------+------------------+
| ID                                   | Name  | Status | Task State | Power State | Networks         |
+--------------------------------------+-------+--------+------------+-------------+------------------+
| 79f7c0b6-6761-454d-9061-e5f143f02a0e | ceph1 | ACTIVE | -          | Running     | private=10.0.0.5 |
| f895d02e-84f8-4e30-8575-ef97e21a2554 | ceph2 | ACTIVE | -          | Running     | private=10.0.0.6 |
+--------------------------------------+-------+--------+------------+-------------+------------------+

They seem to be running. Now we verify that each instance's disk was created in its respective Ceph pool:

$ sudo rbd -p vms ls

79f7c0b6-6761-454d-9061-e5f143f02a0e_disk

79f7c0b6-6761-454d-9061-e5f143f02a0e_disk.config

$ sudo rbd -p vms2 ls

f895d02e-84f8-4e30-8575-ef97e21a2554_disk

f895d02e-84f8-4e30-8575-ef97e21a2554_disk.config
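With more than a couple of instances, eyeballing the listings gets tedious. The following sketch checks that each instance's images live in exactly the expected pool. The listings are hard-coded from the output above (in practice they would come from `rbd -p <pool> ls`), and `pools_holding` is a hypothetical helper name:

```python
# Image names per pool, copied from the `rbd ls` output above.
RBD_LISTINGS = {
    "vms": [
        "79f7c0b6-6761-454d-9061-e5f143f02a0e_disk",
        "79f7c0b6-6761-454d-9061-e5f143f02a0e_disk.config",
    ],
    "vms2": [
        "f895d02e-84f8-4e30-8575-ef97e21a2554_disk",
        "f895d02e-84f8-4e30-8575-ef97e21a2554_disk.config",
    ],
}

# Instance ID -> pool we expect, based on the flavor each instance was booted with.
EXPECTED_POOL = {
    "79f7c0b6-6761-454d-9061-e5f143f02a0e": "vms",   # ceph1, flavor 8
    "f895d02e-84f8-4e30-8575-ef97e21a2554": "vms2",  # ceph2, flavor 9
}


def pools_holding(instance_id, listings):
    """Return the sorted pools that contain at least one image of the instance."""
    prefix = instance_id + "_"
    return sorted(
        pool
        for pool, images in listings.items()
        if any(image.startswith(prefix) for image in images)
    )


for instance_id, expected in EXPECTED_POOL.items():
    found = pools_holding(instance_id, RBD_LISTINGS)
    status = "OK" if found == [expected] else "MISMATCH"
    print(f"{instance_id}: expected {expected}, found {found} [{status}]")
```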

Done!

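I really like this setup because it is very flexible. One thing that remains unclear at the moment is how resource statistics behave. This will be tricky: since both nova-compute processes report the resources of the same physical machine, the totals will simply be doubled. That is probably not ideal and, unfortunately, there is no way to mitigate it.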
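A small arithmetic sketch of that doubling, assuming each logical compute service reports the full capacity of the physical machine. The capacity figures are invented for illustration and do not come from a real host:

```python
# Hypothetical capacity of the single physical hypervisor.
PHYSICAL_CAPACITY = {"vcpus": 16, "memory_mb": 65536}

# The two logical compute services from this article.
LOGICAL_HOSTS = ["compute-ceph1", "compute-ceph2"]

# Assumption: every logical host reports the same physical capacity.
reported = {host: dict(PHYSICAL_CAPACITY) for host in LOGICAL_HOSTS}

# What a naive sum over all compute services would show.
totals = {
    resource: sum(stats[resource] for stats in reported.values())
    for resource in PHYSICAL_CAPACITY
}

for resource, real in PHYSICAL_CAPACITY.items():
    print(f"{resource}: physical={real}, summed over services={totals[resource]}")
```

With two services the summed figures are twice the physical ones, so cloud-wide capacity numbers should be read with that in mind.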